
Fusion of Differently Exposed Image Sequences


Ron Rubinstein

Supervisor: Alexander Brook

ABSTRACT

This document provides an outline of our project. For a more thorough discussion, see the project report.

Real-world scenes often exhibit very high dynamic ranges, which cannot be captured by a sensing device in a single shot, nor successfully reproduced by an imaging device. To overcome this, such scenes must be represented as a series of differently exposed images, each covering a different sub-band of the complete dynamic range. In this project we implemented an algorithm for fusing such image sequences into a single low dynamic range image, which may be displayed on a standard device. The fusion process accumulates all the details, which initially span many images, in a single image. The algorithm is simple, highly stable and computationally efficient. It may be applied to both gray-scale and full-color images.

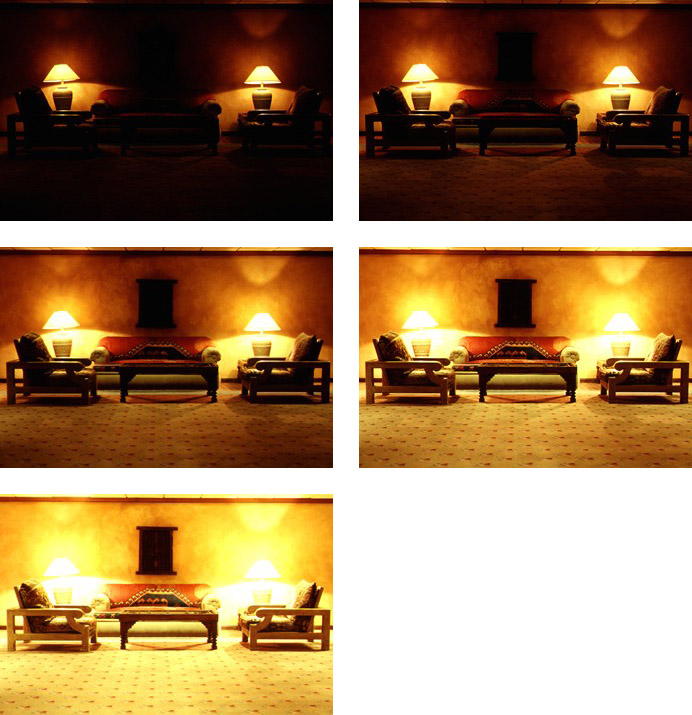

I. INTRODUCTION

The full dynamic range of a real-world scene is generally much larger than that of the sensing devices used to capture it, and of the imaging devices used to reproduce it. When a large dynamic range must be processed using a limited-range device, one is forced to split the dynamic range into several smaller "strips" and handle each of them separately. This process produces a sequence of images of the same scene, covering different portions of the dynamic range. When capturing a high dynamic range (HDR) scene, the sequence is obtained by varying the exposure settings of the sensor. When reproducing an HDR scene, the sequence is obtained by splitting the full range of the image into several sub-ranges and displaying each separately. In both cases, we make use of a variable-exposure image sequence.

Figure 1 presents a sample image sequence. The leftmost image is the most under-exposed, and the rightmost is the most over-exposed.

The major drawback of such an image sequence is usability: since the details span several images, the scene is difficult to interpret for both humans and computers (most visual applications assume a single image containing all the details). It is therefore important to develop techniques for merging (fusing) such image sequences into single, more informative, low dynamic range (LDR) images, which maintain all the details at the expense of brightness accuracy.

In this project, we implemented a fusion algorithm based on Laplacian pyramid representations[1][2]. The Laplacian pyramid is an over-complete, multi-scale representation, in which each level roughly corresponds to a unique frequency band. Our implementation is based on a simple maximization process in the Laplacian pyramid domain, and provides an effective way of producing an informative, high-detail image from the sequence of differently exposed images. Our method may be applied to both gray-scale and full-color images. Possible uses for our method include -


II. METHODS

Laplacian Pyramids. The Laplacian pyramid representation was introduced by Burt and Adelson[1] in 1983, and is accepted today as a fundamental tool in image processing. The Laplacian pyramid is derived from the Gaussian pyramid representation, which is a sequence of increasingly filtered and downsampled versions of an image (see for example figure 2). The set of difference images between consecutive Gaussian pyramid levels, along with the coarsest (most downsampled) level of the Gaussian pyramid, is known as the Laplacian pyramid of an image. The difference levels are commonly referred to as the detail levels, and the additional level as the approximation level. An example Laplacian pyramid is shown in figure 3. The Laplacian pyramid transform is specifically designed for capturing image details over multiple scales.

Fusing Variable-Exposure Image Sequences. The core of the fusion process is a simple maximization in the Laplacian domain. The result of the Laplacian-domain fusion is post-processed to enhance its contrast and detail visibility. Given the variable-exposure sequence, the entire fusion algorithm is outlined by the following steps:
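As a rough illustration of the pyramid construction and the maximization rule described above, here is a minimal pure-Python sketch. It is not the project's implementation, and several choices are assumptions made for brevity: a 2x2 box average stands in for the Gaussian filter, upsampling is done by pixel replication, "maximization" is taken to mean keeping the detail coefficient of largest absolute value at each position, and the approximation levels are simply averaged. The post-processing step is omitted. All function names are hypothetical.

```python
def reduce_level(img):
    """Halve the resolution by averaging 2x2 blocks (assumed stand-in for Gaussian filtering)."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [[(img[2 * y][2 * x] + img[2 * y][2 * x + 1] +
              img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1]) / 4.0
             for x in range(w)] for y in range(h)]


def expand_level(img):
    """Double the resolution by replicating every pixel into a 2x2 block."""
    out = []
    for row in img:
        wide = [v for v in row for _ in (0, 1)]
        out.append(wide)
        out.append(list(wide))
    return out


def laplacian_pyramid(img, levels):
    """Return [detail_0, ..., detail_{levels-1}, approximation], finest level first."""
    pyramid, current = [], img
    for _ in range(levels):
        coarser = reduce_level(current)
        predicted = expand_level(coarser)
        # Detail level: what is lost when moving to the coarser level.
        pyramid.append([[c - p for c, p in zip(rc, rp)]
                        for rc, rp in zip(current, predicted)])
        current = coarser
    pyramid.append(current)  # approximation level
    return pyramid


def reconstruct(pyramid):
    """Invert laplacian_pyramid by adding the detail levels back, coarse to fine."""
    current = pyramid[-1]
    for detail in reversed(pyramid[:-1]):
        predicted = expand_level(current)
        current = [[d + p for d, p in zip(rd, rp)]
                   for rd, rp in zip(detail, predicted)]
    return current


def fuse(images, levels):
    """Fuse same-sized gray-scale images in the Laplacian pyramid domain."""
    pyramids = [laplacian_pyramid(im, levels) for im in images]
    fused = []
    for lvl in range(levels):
        layers = [p[lvl] for p in pyramids]
        # Assumed rule: keep the coefficient with the largest magnitude.
        fused.append([[max(vals, key=abs) for vals in zip(*rows)]
                      for rows in zip(*layers)])
    # Assumed rule: average the approximation levels.
    n = len(images)
    fused.append([[sum(vals) / n for vals in zip(*rows)]
                  for rows in zip(*[p[-1] for p in pyramids])])
    return reconstruct(fused)
```

Images are represented as lists of rows of numbers, and both image dimensions must be divisible by `2 ** levels`. For example, `fuse([under, over], 3)` would fuse two 8x8 (or larger) exposures using three detail levels.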

Motion Compensation. The images in the input sequence may not always be fully aligned (for instance, when the sequence is shot using a hand-held device). In these cases the fusion will produce a "fuzzy" or "blurry" image, so it is essential to align all the images prior to fusion. As part of this project, we implemented a preprocessing unit which detects the global motion between the images and compensates for it. Our method assumes a global translation-and-rotation motion model. It is based on the multi-scale version of the well-known Lucas-Kanade sub-pixel motion estimation algorithm[3], adapted for handling varying exposure conditions by replacing the Gaussian pyramid used in the classic version of the algorithm with a Laplacian pyramid.
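The core least-squares step of Lucas-Kanade estimation can be sketched as follows. This is a deliberately reduced illustration, not the project's estimator: it assumes a pure translation (no rotation), works at a single scale, and performs a single linearized step without iterative warping. In the scheme described above, the inputs would be matching Laplacian pyramid levels of two exposures rather than raw intensities. The function name `estimate_translation` is hypothetical.

```python
def estimate_translation(ref, moved):
    """One Lucas-Kanade step for a global shift.

    Finds (dx, dy) such that moved(x, y) is approximately ref(x - dx, y - dy),
    by solving the 2x2 normal equations of the linearized brightness
    constancy constraint over all interior pixels.
    """
    h, w = len(ref), len(ref[0])
    sxx = sxy = syy = bx = by = 0.0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Central-difference gradients of the reference image.
            gx = (ref[y][x + 1] - ref[y][x - 1]) / 2.0
            gy = (ref[y + 1][x] - ref[y - 1][x]) / 2.0
            diff = ref[y][x] - moved[y][x]
            sxx += gx * gx
            sxy += gx * gy
            syy += gy * gy
            bx += gx * diff
            by += gy * diff
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("gradients are degenerate; the shift is not determined")
    dx = (bx * syy - by * sxy) / det
    dy = (by * sxx - bx * sxy) / det
    return dx, dy
```

Because the step relies on a first-order approximation, it is only accurate for small shifts. This is the usual motivation for the multi-scale version, where a coarse estimate is refined level by level.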


III. RESULTS

Some sample results of the fusion process are presented in figures 4, 5 and 6. All results were produced automatically, using the default settings. As can be seen, the resulting images contain all the details appearing in the input sequences, with no added artifacts or visible noise amplification. Color information is not entirely preserved, but its quality is acceptable. Additional examples may be found in the files section.


V. FILES

The complete project report is available for download:

Many fusion examples are available below. These include several sets of real photos taken during my trip to London, all of which were shot hand-held, without a tripod. The collection can be downloaded as a single compressed file:

ACKNOWLEDGEMENT

I would like to thank my supervisor, Mr. Alex Brook, whose dedicated guidance, assistance and support made this whole project possible. Many thanks to Mr. Eyal Gordon for his enlightening ideas, help and interest in the project, and finally to Dr. Michael Elad for his informative comments and advice.